So why talk about my home lab? Every once in a while, when talking to colleagues at work or to people in the industry in general, I mention it and then get asked for details. Instead of digging through photos on my phone taken at various points throughout the year, I decided to write a blog post covering my home lab’s current configuration and setup. This lets me share a single link that covers everything.

Disclaimer: I’m going to include a lot of sources because I expect half my audience to have lived only in the DevOps world, and the other half only in the on-prem world.

None of the links I provide to products or services are endorsements (paid or otherwise)!

I am simply trying to be complete in listing what I have here for educational purposes only.

What do I even need a home lab for?

Home labs serve a few purposes. The biggest one is education. A home lab gives me an environment with an up-front cost that I can use for years without worrying about expensive recurring cloud bills.

Another great reason is resource availability. I’m a big fan of home automation, and consumer network hardware struggles on networks with lots of devices. “Prosumer” and enterprise-grade hardware handles heavy or busy network loads far better and is generally more reliable and performant. I burned through a lot of “gaming routers” before settling on an enterprise appliance.

My final and most important reason is that, at this point, I need a local environment that is powerful and complex enough to do the things I, as an engineer, am accustomed to doing during the day. My day job workflows have spilled over into my hobby environment to the point where not having the environment feels like an outage.

Why I consider my home lab to be a prod environment

For years now I’ve shied away from rebuilding my home lab from scratch. The entire environment serves purposes and services that I rely on. Additionally,

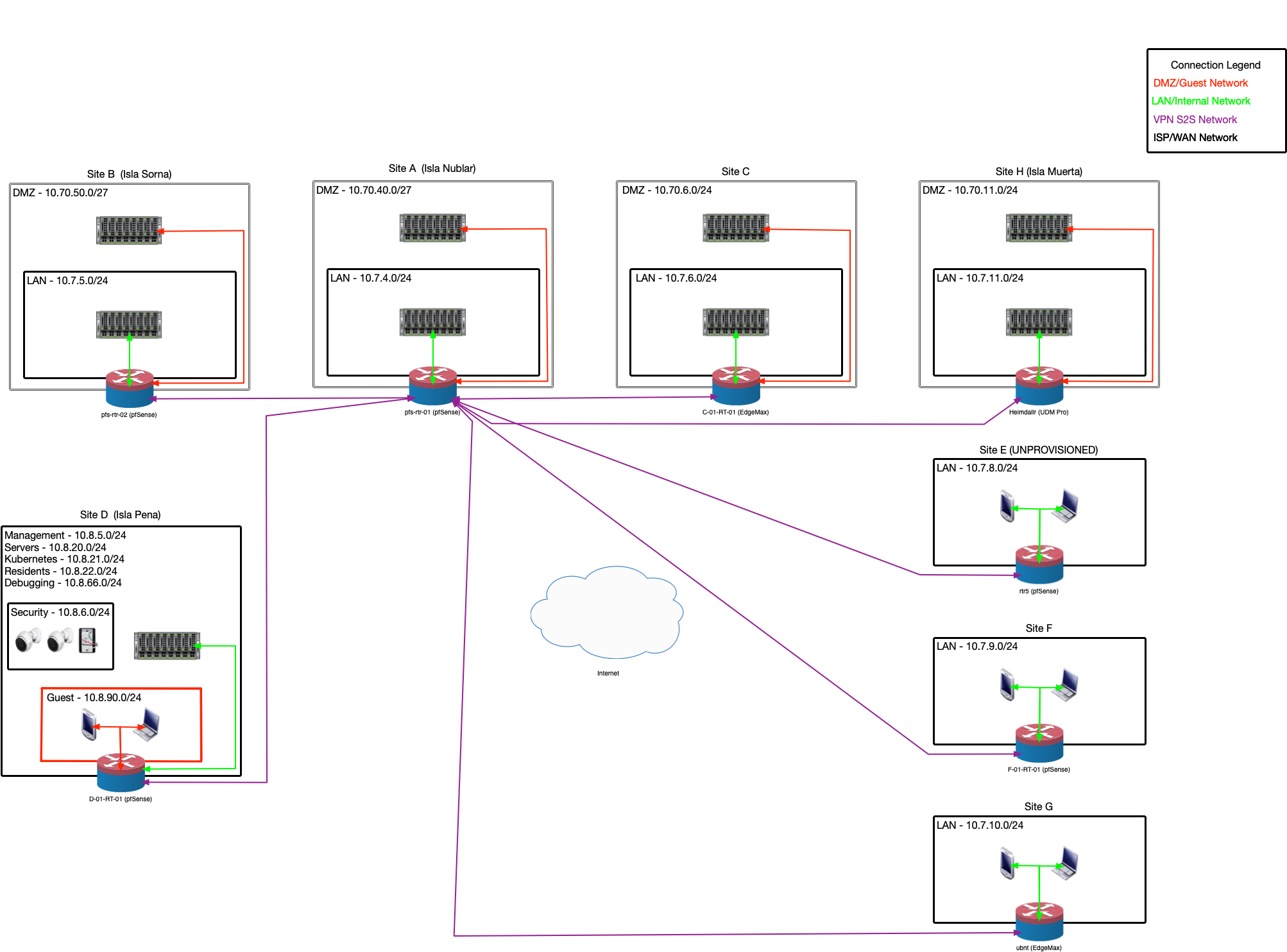

friends and family have also come to rely on the services I host. My home lab is part of a Site-to-Site VPN network that spans 7 separate homes. Each location

hosts some type of service or backup service that we all tend to use. Rebuilding my home lab would be a very disruptive event. Our network has reached the point of stability

and criticality that we have (informal) change control discussions before any changes can happen.

This does not mean I’ve lost control of my home lab. I can run it how I see fit, but certain network layers and infrastructure I’ve agreed to host (*cough* AD *cough*) have uptime and design requirements.

The hardware I chose

My home lab recently underwent a huge networking upgrade. After a long time of saving and planning, I have moved the core of the network to 10Gb. This was not a cheap build, but fortunately I am quite happy with it.

|

|---|

| Network Rack Front |

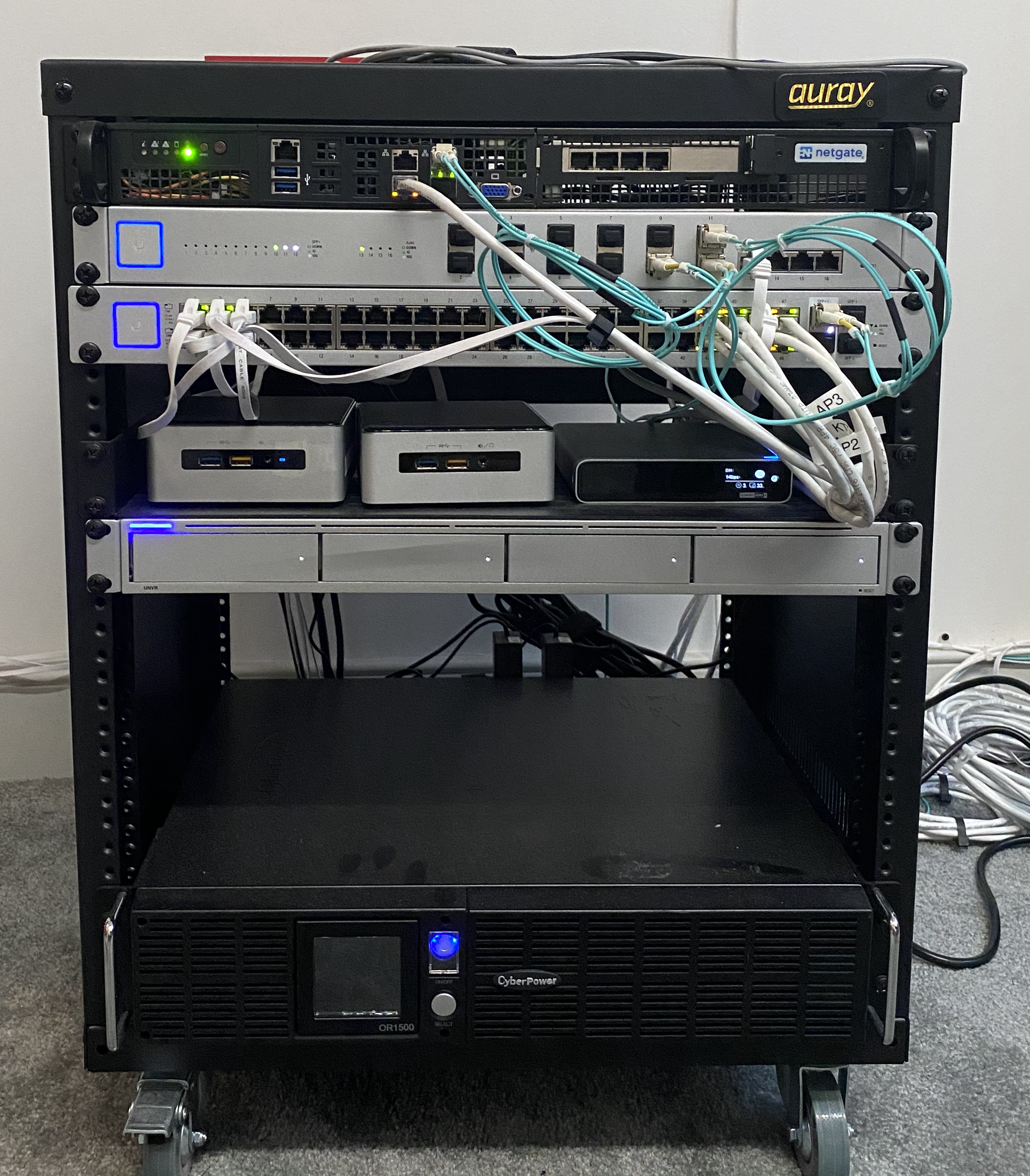

- For a rack I chose an Auray 12U A/V equipment rack[1]. It’s not something I would buy again: the build quality is poor, and I had to return two racks. It’s also only half depth. I originally wanted that, but building a half-depth NAS is not easy, and that makes me regret the decision.

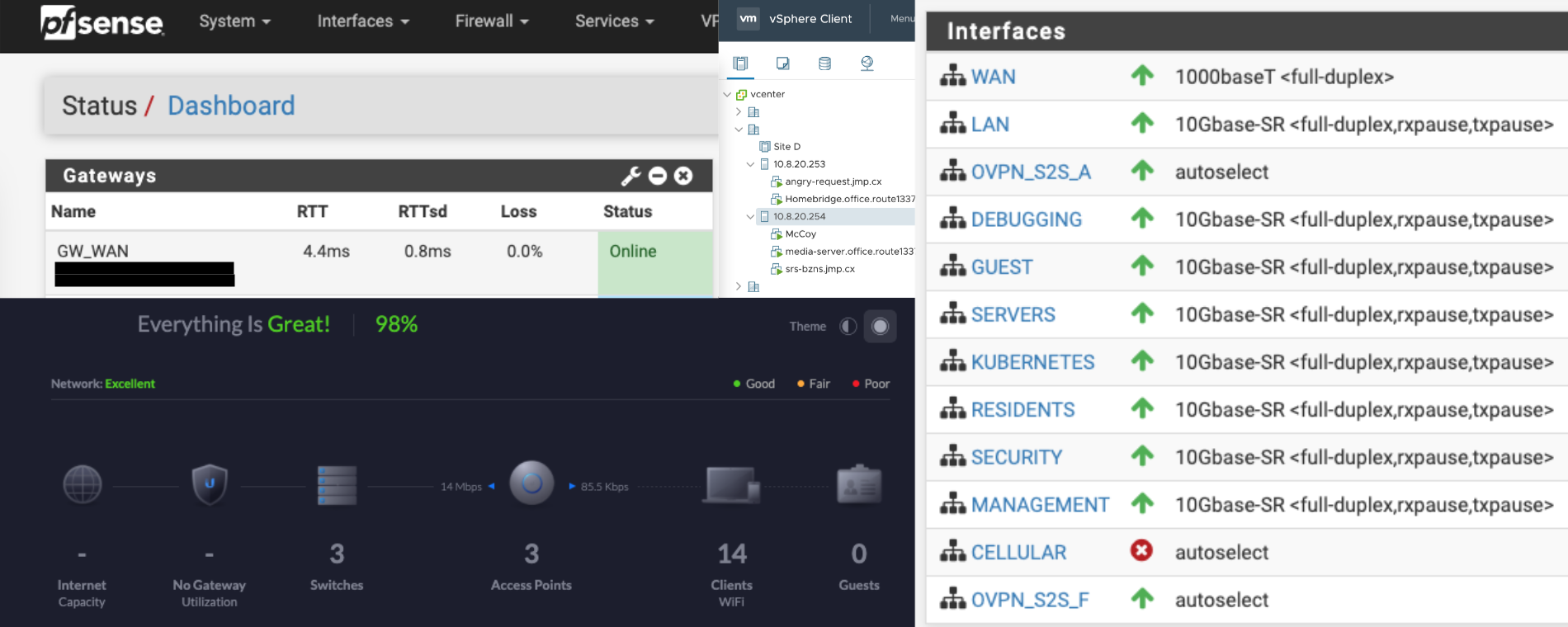

- The server in U1 is my router: Netgate’s XG-1537 1U server[2]. It’s a custom SuperMicro build with dual 1Gb Ethernet ports and dual 10Gb SFP+ ports. I also added an expansion card with 4 more 1GbE ports for testing. This appliance comes preloaded with pfSense.

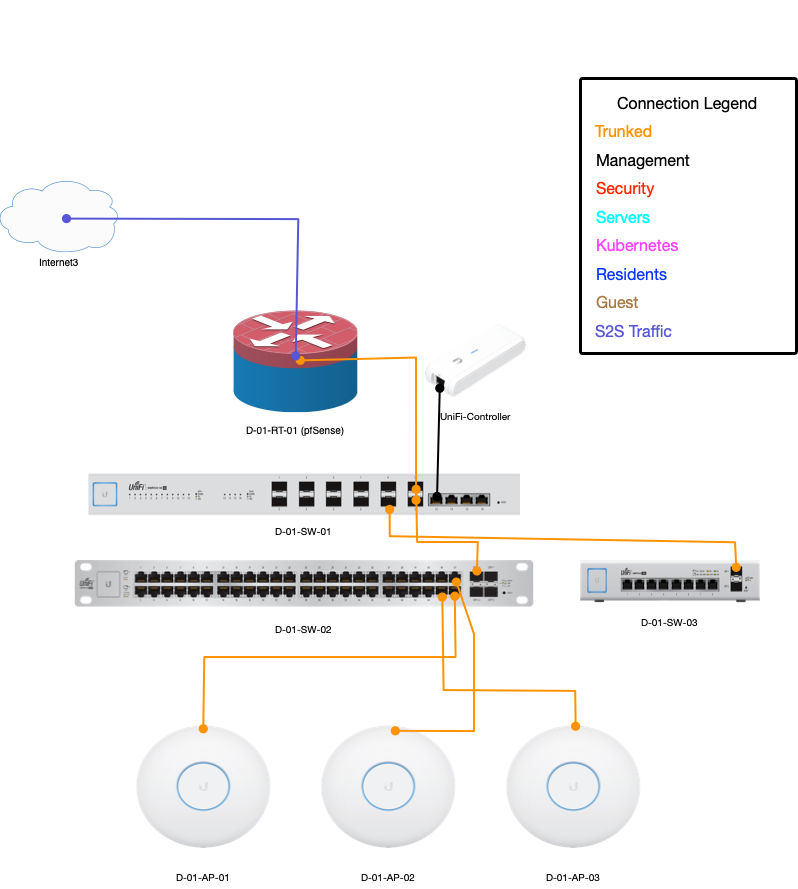

- The device sitting in U2 is a Ubiquiti UniFi US-16-XG[3] fiber switch. This is my core switch. The UniFi Cloud Key+ is directly connected to it, as are two more switches. All 16 ports on this switch are 10Gb. The end goal is to connect a few servers directly to this switch for a storage VLAN.

- The switch sitting in U3 is a Ubiquiti UniFi US-48-500W[4] switch. This is a 500W PoE switch with 48 1GbE ports and 2 10Gb SFP+ ports. I use it as the primary data switch in my environment. Right now all servers, security cameras, and wired workstations connect directly to this switch.

- Not pictured, there is also a Ubiquiti UniFi US-8-150W[5] switch. This is a 150W PoE switch with 8 1GbE ports and, sadly, only 2 1Gb SFP ports. It sits in my entertainment center and serves the equipment there. It’s unfortunate that this switch’s uplink to the core switch is not 10Gb, but there wasn’t a good reason to buy a more expensive switch for this spot.

- U4-5 are taken up by a shelf holding two Intel NUCs[6] along with a Ubiquiti UniFi Cloud Key Gen2+[7].

- The NUCs run VMware ESXi for hosting classic virtual machines.

- The Cloud Key runs the UniFi network controller software that manages all of the UniFi equipment.

- U6 houses the Ubiquiti UniFi Protect NVR[8]. The NVR contains four 8TB Western Digital Purple drives in RAID 5 and stores the footage from my UniFi Protect surveillance cameras. Right now I have 4 interior cameras and 1 UniFi Protect G4 Doorbell[9]. All 5 cameras are set to continuous recording, with motion events and human detection flagged for quick review.

- U11-12 contain a CyberPower 1500VA/900W Simulated Sine Wave UPS[10]. All of the equipment in the rack is plugged into the UPS.

- Not pictured: 3 Ubiquiti UniFi AP AC Pro[11] access points are mounted on the ceiling in several rooms. Ceiling-mounted APs were not part of the plan for a 2 bedroom apartment, but heavy local interference from neighbors and a nearby airport made this design necessary.
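For anyone unfamiliar with RAID 5: it stripes parity across all of the drives, so one drive’s worth of space goes to parity and the array survives a single drive failure. Here is a minimal sketch of the capacity arithmetic for the NVR’s four 8TB drives, assuming decimal terabytes and ignoring filesystem overhead (the helper names are hypothetical):

```python
def raid5_usable_tb(drive_count: int, drive_size_tb: float) -> float:
    """Approximate usable capacity of a RAID 5 array in decimal TB.

    RAID 5 spreads parity across all drives, so one drive's worth of
    space is consumed by parity. Filesystem overhead is ignored.
    """
    if drive_count < 3:
        raise ValueError("RAID 5 needs at least 3 drives")
    return (drive_count - 1) * drive_size_tb


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes (10**12 bytes) to tebibytes (2**40 bytes)."""
    return tb * 10**12 / 2**40


usable = raid5_usable_tb(4, 8)  # four 8TB drives, as in the NVR
print(f"{usable} TB usable, about {tb_to_tib(usable):.1f} TiB")
# prints: 24 TB usable, about 21.8 TiB
```

Drive vendors count in decimal terabytes while some tools report binary tebibytes, which is why the usable space can look smaller than 24TB.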

The software I chose

Some of my home lab software choices were mandated by the collective Site-to-Site VPN I’m a member of. Others are just personal choice.

- For the router I chose to run Netgate’s pfSense[12]. I really like pfSense as a router/firewall platform. I won’t pretend I wouldn’t prefer “nextgen firewall” features in it for “zero trust”, but that’s not a top priority for me, and it will come eventually. This appliance has enough power for demanding network traffic and high-speed VPN service, with cryptographic functions offloaded to the hardware.

- For servers, I’m currently using those two Intel NUCs to run VMware ESXi[13]. I originally chose Intel NUCs for ESXi because they were quiet, low-power systems that could be kept in a coat closet in a 700 sq ft, 1 bedroom apartment. ESXi itself was chosen because an ESXi cluster connected to a central vCenter server is a standard network requirement for the Site-to-Site VPN. Some core services every site is mandated to host are kept in the ESXi cluster.

- As for those core services, I run two Windows Servers with Active Directory and DNS services. These servers provide DNS and DHCP to every VLAN. (No client devices are bound to AD.)

- I also run several Ubuntu LTS servers for things like Dockerized NGINX and Homebridge[14]. Most services my home uses now run in Docker[15] containers. At some point I plan to build a pair of 1U servers that will take over running ESXi.

Internet Service

For a home lab with this kind of internal network speed, and with the work I do during the day, only a fiber provider would do. In fact, a deciding factor when I look for a place to live is whether a gigabit fiber ISP serves the location. This is a hard requirement for me regardless of home labs.

- Primary ISP: Currently I have a symmetric gigabit fiber connection with 5 static IPs provided by Verizon FIOS[16].

- Secondary ISP: I’m currently planning cellular failover for specific VLANs in order to keep security alerts and controls remotely accessible should my primary ISP go down. I have not chosen a cellular provider yet.

Why not Kubernetes?

Instead of running several standalone Ubuntu servers with Docker, why not run Kubernetes? I’ve asked myself that a few times, and the answer is really simple.

At the moment I don’t want to maintain a Kubernetes cluster at home until I have more hardware resources. First I want to add some backend storage and replace the NUCs with 1U servers.

Tying things to the cloud

Being a DevOps Engineer/SRE by trade, I definitely can’t get away with so much legacy technology without a little cloud seeping in. Legacy tech such as ESXi and Windows Server AD serves core requirements like hosting VMs and authentication, but only because the cloud equivalents are far too costly. I also aim to keep my entire home lab independent of the internet: no internal service should break because an external service goes down. With all of that in mind, I do have some active production use of the cloud.

- I use AWS[17] for external-facing DNS for several websites. This website is hosted on AWS as well.

- Google Workspace[18] is another cloud service I pay for. My family has a custom email domain, and we share calendars and other Google-managed resources via this domain. Google Workspace has been well worth the monthly price for what it provides, even in a consumer use case.

- I use JumpCloud[19] and Duo[20] for two-factor authentication at the edge of my network. OpenVPN users need a JumpCloud account with Duo Push on their phone to log in to the Residents VPN. This was done to help protect the edge of my network from brute-force attacks.

I decided against hosting my own website and/or email at home because my personal and professional presence online needs more uptime than a small business ISP can provide. I did not want a home lab outage to make me miss important email or harm my professional presence as an engineer. Fortunately, I have had no unplanned outages unrelated to my ISP since the lab went online when I moved into this apartment (as of the original publishing date of this blog post).

The network layout

The network layout is a little more complex than most home labs because I come from a security background and prefer segregation of services at the VLAN layer.

- The Management VLAN is where all network hardware management interfaces sit. Nothing can access this VLAN except admin workstations via a VPN. Traffic both in and out of this VLAN goes through a “deny by default” firewall, with holes punched only for specific flows.

- A Security VLAN with similar hole punch measures is where all surveillance equipment, door locks, and smart safes sit.

- The Servers VLAN allows all traffic out, but all inbound traffic must go through a hole punch.

- The Kubernetes VLAN is configured the same way as the Servers VLAN; however, no infrastructure is running there at this time.

- The Residents VLAN is another “all traffic out, hole punch in” VLAN; however, no holes are ever permanent.

- The Guest VLAN allows content filtered traffic out to the internet, but has no incoming or outgoing traffic with any VLANs on the network. It is also unable to access any resources located at other sites on the Site-to-Site VPN.
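To make the policy above more concrete, here is a minimal Python sketch of the VLAN rules as a default-deny decision function. It is a simplified model under stated assumptions, not my actual pfSense configuration: the hole punches listed are hypothetical examples, a single hole entry stands in for the matching egress and ingress rules, and content filtering and Site-to-Site reachability are not modeled.

```python
from dataclasses import dataclass, field

INTERNET = "internet"  # pseudo-destination for WAN-bound traffic


@dataclass(frozen=True)
class Vlan:
    name: str
    open_outbound: bool     # True = "all traffic out"; False = deny by default
    isolated: bool = False  # True = Guest-style: internet only, no other VLANs


@dataclass
class Firewall:
    vlans: dict[str, Vlan]
    # Hole punches as (source, destination, port). In this simplified model a
    # single entry opens both the source's egress and the destination's ingress.
    holes: set[tuple[str, str, int]] = field(default_factory=set)

    def allows(self, src: str, dst: str, port: int) -> bool:
        hole = (src, dst, port) in self.holes
        source = self.vlans[src]
        if dst == INTERNET:
            return source.open_outbound or hole
        if source.isolated or self.vlans[dst].isolated:
            return False  # isolated VLANs never exchange traffic with other VLANs
        return hole  # inbound to every VLAN is hole-punch only


vlans = {v.name: v for v in [
    Vlan("Management", open_outbound=False),
    Vlan("Security", open_outbound=False),
    Vlan("Servers", open_outbound=True),
    Vlan("Kubernetes", open_outbound=True),
    Vlan("Residents", open_outbound=True),
    Vlan("Guest", open_outbound=True, isolated=True),
]}

# Hypothetical hole punches, for illustration only.
fw = Firewall(vlans, holes={("Residents", "Servers", 443), ("Management", INTERNET, 123)})

print(fw.allows("Residents", "Servers", 443))  # True: punched hole
print(fw.allows("Residents", "Servers", 22))   # False: no hole for SSH
print(fw.allows("Security", INTERNET, 443))    # False: deny-by-default egress
print(fw.allows("Guest", "Servers", 443))      # False: Guest is isolated
```

The design choice worth noting is that every inter-VLAN decision starts from "deny" and only an explicit entry opens a path, which mirrors the hole punch approach described above.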

Only the Servers, Kubernetes, and Residents VLANs are advertised over the Site-to-Site network via BGP.
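The choice of which VLANs get announced can be sketched the same way. This Python example assumes hypothetical subnets for each VLAN and hypothetical prefixes learned from other sites; on the real network the announcements are handled by OpenBGPD on pfSense. The overlap check is an added assumption, reflecting that overlapping subnets between sites would make routes ambiguous.

```python
import ipaddress

# Hypothetical subnets for illustration; not the real addressing plan.
LOCAL_VLANS = {
    "Management": "10.20.10.0/24",
    "Security":   "10.20.20.0/24",
    "Servers":    "10.20.30.0/24",
    "Kubernetes": "10.20.40.0/24",
    "Residents":  "10.20.50.0/24",
    "Guest":      "10.20.60.0/24",
}

# Only these VLANs are announced to the Site-to-Site network.
ADVERTISED = ("Servers", "Kubernetes", "Residents")


def prefixes_to_announce(vlans: dict, advertised: tuple, peer_prefixes: list) -> list:
    """Return the local prefixes to announce, refusing any that overlap a peer's."""
    announce = []
    for name in advertised:
        net = ipaddress.ip_network(vlans[name])
        for peer in peer_prefixes:
            if net.overlaps(ipaddress.ip_network(peer)):
                raise ValueError(f"{name} ({net}) overlaps peer prefix {peer}")
        announce.append(net)
    return announce


peers = ["10.30.0.0/16", "10.40.0.0/16"]  # hypothetical prefixes from other sites
for net in prefixes_to_announce(LOCAL_VLANS, ADVERTISED, peers):
    print(net)  # prints 10.20.30.0/24, 10.20.40.0/24, 10.20.50.0/24 on separate lines
```

Management, Security, and Guest never appear in the output, so no other site can even route toward them.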

As discussed above, I’m using a mix of vendors for network hardware. The router is a pfSense appliance on customized SuperMicro hardware provided by Netgate. The rest of my network hardware is Ubiquiti UniFi equipment. I could run a UniFi Dream Machine Pro (UDMP), and it does interest me; however, UniFi is not there yet as a platform in terms of router features. For example, I have 5 static IPs and the UDMP only supports a single WAN IP. It has a pretty UI for DPI/IDS, but I can use open source tools to build pretty UIs for this information and fine-tune the configuration in pfSense, which gives me more control than the simple toggles in the UDMP. I also needed control of BGP, which I could not get with the UniFi routers; OpenBGPD on pfSense provides it for me with a simple configuration file.

I use UniFi hardware for the core fiber switch, the data switches, and the APs. As I mentioned above, I also use UniFi for surveillance hardware. A bad firmware release has made me cautious about updating this hardware. I tend to update only for security fixes to avoid the unnecessary, and frankly frequent, issues with Ubiquiti’s bad releases. UniFi burned me pretty badly with a poorly QA’d AP firmware that caused a lot of random disconnects across all wireless clients. Rolling back 3 APs via SSH at 2am is a good way to ruin trust in updates. Once on a genuinely stable firmware, though, the hardware is rock solid.

Figure: Home Lab Physical Topology

The Site-to-Site VPN started small between just two of my friends a long time ago. I was the 4th home to join the network, and am now the third largest service provider on the network.

Four of the locations on the Site-to-Site network provide and consume services, while three only consume them. As a group we strive for specific standards, and we discuss and approve (or deny) changes to those standards.

My lab’s network design is far more complex than the other sites’ because of the VLAN segregation, but otherwise I host redundant and unique services according to our collective standards. I also host some services for myself that are not shared across the network (as all sites tend to do).

Figure: Site-to-Site VPN High Level Topology

Plans for the future

I have several future plans for my home lab, but no specific dates are set. Time, money, and the desire to do more after-hours work are all limited resources at the moment. Briefly, and in no particular order, my current plans are:

- Cellular Failover: This is quickly becoming a necessity given Verizon’s recent uptime issues. I’m actively looking into providers as well as hardware for this.

- Central Storage: At some point I want to build a storage array and attach it to the core switch via a 10Gb SFP+ connection. It would serve as the central storage for VMs, Kubernetes resources, and end user files. This is a necessity to expand and upgrade VMware as well as for moving to K8s.

- Replace the NUCs: Once I have a central storage solution, I plan to replace the NUCs with 1U servers that have far more performance. This will allow me to host Kubernetes as VMs inside ESXi, or even directly on ESXi using the new vCenter 7[21].

- Replace UniFi: Ubiquiti had frequent QA issues with firmware releases in 2020, and most recently they released an update that mandates cloud access to configure and manage the Cloud Key Gen2+. While I’m fine using the cloud for some things, I draw the line at cloud-free appliances becoming cloud-mandated via firmware updates. That, plus the general fear of software updates breaking things across their entire product line, has me rethinking both networking and surveillance. For now I am keeping this freshly purchased hardware on isolated VLANs while I hope they clean up their act.

- ARM Systems: I’m toying with the idea of running a Kubernetes cluster on ARM hardware. I also might get an Apple Silicon Mac mini for Xcode build CI/CD pipelines, though that really depends on whether I ever get around to learning Swift. I have more important programming goals at the moment.

- The two Intel NUCs are older variants and slightly different models.